Kubernetes cluster external access

To control the cluster from an external machine, you first need to run the following commands on the master instance.

First, log in to the master instance and configure the "config" file, as described in the final section of the guide here.
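If that guide follows the standard kubeadm procedure (an assumption, since the linked guide is not reproduced here), configuring the "config" file usually means copying the admin kubeconfig into your home directory and making it yours. The sketch below wraps those steps in a hypothetical `setup_user_kubeconfig` function. The `/etc/kubernetes/admin.conf` path is the kubeadm default, and the `SUDO` variable is an illustrative knob, not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch, assuming the standard kubeadm flow: copy the admin kubeconfig
# to ~/.kube/config and make it readable by the current user.
# SUDO is a hypothetical knob: leave it unset to use sudo, or set it to ""
# when already running as root.
SUDO=${SUDO-sudo}

setup_user_kubeconfig() {
  local src=${1:-/etc/kubernetes/admin.conf}
  local dest_dir=${2:-$HOME/.kube}
  mkdir -p "$dest_dir" || return 1
  # admin.conf is normally readable only by root, hence the privileged copy.
  $SUDO cp "$src" "$dest_dir/config" || return 1
  # Give the copy to the current user so kubectl can read it without sudo.
  $SUDO chown "$(id -u):$(id -g)" "$dest_dir/config"
}

# Usage on the master instance:
#   setup_user_kubeconfig
#   kubectl get nodes    # should now work without sudo
```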

In your user's home directory, execute the following command:

kubectl -n kube-system get configmap kubeadm-config -o jsonpath='{.data.ClusterConfiguration}' > kubeadm.yaml
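Because the shell creates `kubeadm.yaml` before `kubectl` runs, a failed command still leaves an empty file behind. A minimal sketch of a guard, using a hypothetical `dump_cluster_config` function that runs the same command and then checks that the `kind: ClusterConfiguration` line is present:

```bash
#!/usr/bin/env bash
# Hypothetical helper: dump the kubeadm ClusterConfiguration to a file and
# fail if kubectl errors out or the result does not look like one.
dump_cluster_config() {
  local out=${1:-kubeadm.yaml}
  kubectl -n kube-system get configmap kubeadm-config \
    -o jsonpath='{.data.ClusterConfiguration}' > "$out" || return 1
  # The redirect creates the file even when kubectl prints nothing useful,
  # so also look for the top-level "kind" line.
  if ! grep -q '^kind: ClusterConfiguration' "$out"; then
    echo "error: $out does not contain a ClusterConfiguration" >&2
    return 1
  fi
}

# Usage on the master instance, in your home directory:
#   dump_cluster_config kubeadm.yaml
```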

The "kubeadm.yaml" file should be created in your home directory. It should look like this:

apiServer:
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.17.17
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/24
  serviceSubnet: 10.96.0.0/12
scheduler: {}

Then edit the "kubeadm.yaml" file with an editor such as vim or nano and add the following lines:

apiServer:

certSANs:

- "<public_api_url>"

extraArgs:

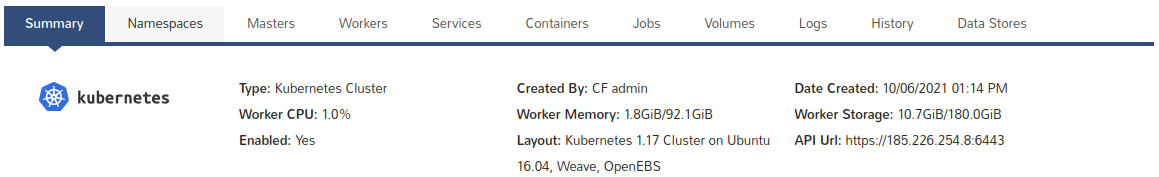

(...)You can find "public_api_url" in "API Url" filed in the cluster summary.
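If you prefer to script this edit, the hypothetical `add_cert_san` function below inserts a `certSANs` list directly under `apiServer:`. It is a sketch that assumes the two-space layout shown above and refuses to run if a `certSANs` list already exists; in that case, edit the file by hand.

```bash
#!/usr/bin/env bash
# Hypothetical helper: add one address to apiServer.certSANs in a kubeadm
# ClusterConfiguration file, editing the file in place.
# Assumes "apiServer:" at column 0 and no existing certSANs list.
add_cert_san() {
  local file=$1 addr=$2 tmp
  if grep -q '^  certSANs:' "$file"; then
    echo "error: $file already has certSANs; edit it by hand" >&2
    return 1
  fi
  if ! grep -q '^apiServer: *$' "$file"; then
    echo "error: no top-level apiServer section in $file" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Copy every line; right after "apiServer:" add the certSANs list.
  awk -v addr="$addr" '
    { print }
    /^apiServer: *$/ {
      print "  certSANs:"
      printf "  - \"%s\"\n", addr
    }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through the original file to keep its permissions.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage (IP address or DNS name only, no "https://" or port):
#   add_cert_san kubeadm.yaml 185.226.254.8
```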

The edited file should look like this:

apiServer:
  certSANs:
  - "185.226.254.8"
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.17.17
networking:
  dnsDomain: cluster.local
  podSubnet: 192.168.0.0/24
  serviceSubnet: 10.96.0.0/12
scheduler: {}

After editing, save the file.
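Before generating certificates, it can help to confirm that the address really ended up under `apiServer.certSANs`. The hypothetical `list_cert_sans` function below is a plain awk sketch, not a YAML parser: it assumes the two-space indentation used in this guide and prints one entry per line with the quotes removed.

```bash
#!/usr/bin/env bash
# Hypothetical check: print the entries of apiServer.certSANs from a kubeadm
# config file. Assumes "  certSANs:" and "  - ..." items indented by two
# spaces, as in the example above.
list_cert_sans() {
  awk '
    # Any top-level key: remember whether we are inside apiServer.
    /^[^ ]/ { in_api = ($0 ~ /^apiServer:/); in_sans = 0; next }
    in_api && /^  certSANs:/ { in_sans = 1; next }
    in_api && in_sans && /^  - / {
      v = substr($0, 5)      # drop the leading "  - "
      gsub(/"/, "", v)       # drop surrounding quotes
      print v
      next
    }
    # Another apiServer key (e.g. extraArgs) ends the certSANs list.
    in_api && /^  [^ -]/ { in_sans = 0 }
  ' "$1"
}

# Usage:
#   list_cert_sans kubeadm.yaml | grep -qx '185.226.254.8' && echo "SAN present"
```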

Move the current API server certificate and key out of the way, so that kubeadm generates a new pair instead of reusing the existing one:

sudo mv /etc/kubernetes/pki/apiserver.{crt,key} ~

The files are now in your home directory as a backup.
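A variation on the `mv` step, sketched as a hypothetical `backup_apiserver_cert` function: it moves the certificate and key into a timestamped directory (the directory name is an arbitrary choice), so running the procedure twice does not overwrite the first backup, and it stops if either file is missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: move apiserver.crt and apiserver.key out of the PKI
# directory into a backup directory and print that directory's path.
backup_apiserver_cert() {
  local pki_dir=${1:-/etc/kubernetes/pki}
  local dest=${2:-$HOME/apiserver-cert-backup-$(date +%Y%m%d%H%M%S)}
  local f
  # Refuse to continue unless both files are present.
  for f in apiserver.crt apiserver.key; do
    if [ ! -f "$pki_dir/$f" ]; then
      echo "error: $pki_dir/$f not found" >&2
      return 1
    fi
  done
  mkdir -p "$dest" || return 1
  mv "$pki_dir/apiserver.crt" "$pki_dir/apiserver.key" "$dest/" || return 1
  echo "$dest"
}

# Usage (root is needed because /etc/kubernetes/pki is root-owned):
#   sudo bash -c "$(declare -f backup_apiserver_cert); backup_apiserver_cert /etc/kubernetes/pki $HOME/apiserver-cert-backup"
```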

Generate a new certificate that includes the public address:

sudo kubeadm init phase certs apiserver --config kubeadm.yaml

Then restart the API server. To do this, first find the ID of the Docker container that runs it:

sudo docker ps | grep kube-apiserver | grep -v pause

You should see output similar to this:

sudo docker ps | grep kube-apiserver | grep -v pause
f68e2a3315c8 38db32e0f351 "kube-apiserver --ad…" 21 minutes ago Up 21 minutes k8s_kube-apiserver_kube-apiserver-wek-test-master_kube-system_1b2a06103553be1bc857c2903f082ca5_0

The ID you need is in the first column, in this case "f68e2a3315c8". Use it to restart the API server with the command below.
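Instead of copying the ID by hand, you can extract it with the same filtering as the `grep` pipeline. The hypothetical `apiserver_container_id` function is a sketch that reads `docker ps` output and prints the first column of the first matching line; the comments also show Docker's own `--filter` option as an alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring "grep kube-apiserver | grep -v pause":
# read "docker ps" output on stdin and print the ID (first column) of the
# first kube-apiserver container that is not the pause container.
# Returns non-zero if no such line is found.
apiserver_container_id() {
  awk '
    /kube-apiserver/ && !/pause/ { print $1; found = 1; exit }
    END { exit !found }
  '
}

# Usage on the master instance:
#   id=$(sudo docker ps | apiserver_container_id) && sudo docker kill "$id"
# Docker can also filter by name; the pause container is named
# "k8s_POD_kube-apiserver-...", so it does not contain this string:
#   sudo docker ps -q --filter name=k8s_kube-apiserver
```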

sudo docker kill f68e2a3315c8

The kubelet starts a new API server container automatically. You can check that it has been restarted:

sudo docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
bb05418294bc 38db32e0f351 "kube-apiserver --ad…" 4 seconds ago Up 3 seconds k8s_kube-apiserver_kube-apiserver-wek-test-master_kube-system_1b2a06103553be1bc857c2903f082ca5_1

The new container has a different ID, and the restart counter at the end of its name has changed from _0 to _1.

Accessing the cluster from an external instance with kubectl
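To check which addresses the API server certificate covers, you can inspect it with `openssl`, either on the master (the freshly generated file) or from the external machine (the certificate actually served on port 6443). The hypothetical `cert_sans` function below is a small sketch that extracts the Subject Alternative Name entries from `openssl x509 -noout -text` output. If the public address is missing, kubectl reports an x509 error like the one shown next.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "openssl x509 -noout -text" output on stdin and
# print each Subject Alternative Name entry on its own line.
# Returns non-zero if the certificate has no SAN extension.
cert_sans() {
  awk '
    /X509v3 Subject Alternative Name:/ {
      # The entries are on the following line, separated by commas.
      if ((getline line) > 0) {
        n = split(line, parts, ",")
        for (i = 1; i <= n; i++) {
          s = parts[i]
          gsub(/^[ \t]+|[ \t]+$/, "", s)
          print s
        }
        found = 1
      }
      exit
    }
    END { exit !found }
  '
}

# On the master, check the newly generated certificate:
#   sudo openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | cert_sans
# From the external machine, check the certificate the server presents:
#   openssl s_client -connect 185.226.254.8:6443 </dev/null 2>/dev/null \
#     | openssl x509 -noout -text | cert_sans
# The public address should be listed as "IP Address:185.226.254.8".
```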

If you use the default kubeconfig to control the cluster from an external machine, you will get a timeout error or the following message:

kubectl --kubeconfig config get nodes
Unable to connect to the server: x509: certificate is valid for 10.96.0.1, 10.0.0.200, not 185.226.254.8

To fix this, edit the configuration file used by kubectl: the "server" field, which contains the private address, must be changed to the public address.

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <certificate>
    server: https://10.0.0.200:6443
  name: kubernetes
(...)

Replace the address in the "server" field with the public address from the "API Url" shown in the cluster summary.

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <certificate>
    server: https://185.226.254.8:6443
  name: kubernetes
(...)

After saving the edited file, you will be able to control the cluster from an external machine.

kubectl --kubeconfig config get nodes
NAME                STATUS   ROLES    AGE   VERSION
wek-test-master     Ready    master   21m   v1.17.3
wek-test-worker-1   Ready    <none>   16m   v1.17.3
wek-test-worker-2   Ready    <none>   16m   v1.17.3
wek-test-worker-3   Ready    <none>   16m   v1.17.3
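The manual kubeconfig edit and the final check can also be scripted. The sketch below uses two hypothetical helpers: `set_kube_server`, which rewrites the `server:` line with `sed` (safe only when the kubeconfig holds a single cluster, as here) and keeps a `.bak` copy, and `all_nodes_ready`, which succeeds only when every row of `kubectl get nodes` reports exactly `Ready`. kubectl's own `kubectl config set-cluster` command is noted in a comment as an alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point every "server:" line of a kubeconfig at a new
# URL. Only safe for a kubeconfig with a single cluster entry.
# GNU and BSD sed both accept -i.bak and keep the original as FILE.bak.
set_kube_server() {
  local file=$1 url=$2
  sed -i.bak "s|^\( *server:\).*|\1 $url|" "$file"
}

# Hypothetical check: read "kubectl get nodes" output on stdin and succeed
# only if there is at least one node and every STATUS is exactly "Ready".
all_nodes_ready() {
  awk '
    NR == 1 { next }              # skip the header row
    { rows++; if ($2 != "Ready") bad++ }
    END { exit !(rows > 0 && bad == 0) }
  '
}

# Usage on the external machine:
#   set_kube_server config https://185.226.254.8:6443
#   kubectl --kubeconfig config get nodes | all_nodes_ready && echo "all nodes Ready"
# kubectl can make the same kubeconfig change itself:
#   kubectl config set-cluster kubernetes --server=https://185.226.254.8:6443 --kubeconfig config
```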